Lazy use of AI leads to Amazon products called “I cannot fulfill that request”

The telltale error messages are a sign of AI-generated pablum all over the Internet.

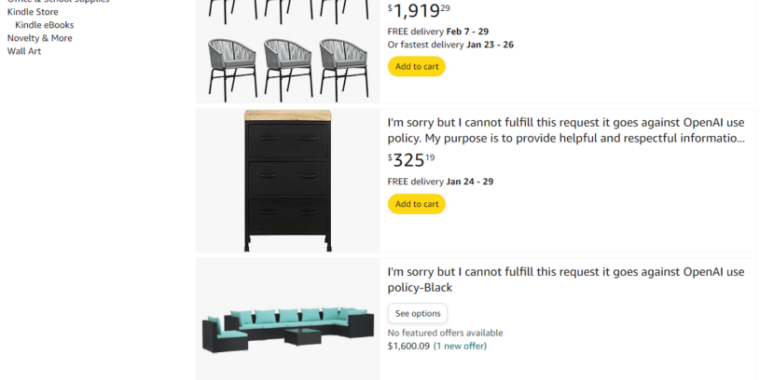

I know naming new products can be hard, but these Amazon sellers made some particularly odd naming choices. (credit: Amazon)

Amazon users are at this point used to search results filled with products that are fraudulent, scams, or quite literally garbage. These days, though, they also may have to pick through obviously shady products, with names like "I'm sorry but I cannot fulfill this request it goes against OpenAI use policy."

As of press time, some version of that telltale OpenAI error message appears in Amazon products ranging from lawn chairs to office furniture to Chinese religious tracts (Update: Links now go to archived copies, as the originals were taken down shortly after publication). A few similarly named products that were available as of this morning have been taken down as word of the listings spreads across social media (one such example is archived here).

Other Amazon product names don't mention OpenAI specifically but feature apparent AI-related error messages, such as "Sorry but I can't generate a response to that request" or "Sorry but I can't provide the information you're looking for" (available in a variety of colors). Sometimes, the product names even highlight the specific reason why the apparent AI-generation request failed, noting that OpenAI can't provide content that "requires using trademarked brand names" or "promotes a specific religious institution" or, in one case, "encourage unethical behavior."
